Getting Started

Calyx is an intermediate language and infrastructure for building compilers that generate custom hardware accelerators. These instructions will help you set up the Calyx compiler and associated tools. By the end, you should be able to compile and simulate hardware designs generated by Calyx.

Compiler Installation

There are three possible ways to install Calyx, depending on your goals.

Using Docker

The easiest way is to use the Calyx Docker image that provides a pinned version of the compiler, all frontends, as well as configuration for several tools.

The following commands will fetch the Docker image and start a container with an interactive shell:

docker run -it --rm ghcr.io/calyxir/calyx:latest

The --rm flag will remove the container after you exit the shell. If you want to keep the container around, remove the flag.

You can skip forward to running a hardware design.

Installing the Crate (to use, but not extend, Calyx)

First, install Rust.

This should automatically install cargo.

If you want just to play with the compiler, install the calyx crate:

cargo install calyx

This will install the calyx binary which can optimize and compile Calyx programs. You will still need the primitives/core.futil and its accompanying Verilog file library to compile most programs.

Installing from Source (to use and extend Calyx)

First, install Rust.

This should automatically install cargo.

Clone the repository:

git clone https://github.com/calyxir/calyx.git

Then build the compiler:

cargo build

You can build and run the compiler with:

cargo build # Builds the compiler

./target/debug/calyx --help # Executes the compiler binary

We recommend installing the git hooks to run linting and formatting checks before each commit:

/bin/sh setup_hooks.sh

You can build the docs by installing mdbook and the callouts preprocessor:

/bin/sh docs/install_tools.sh

Then, run mdbook serve from the project root.

Running Core Tests

The core test suite tests the Calyx compiler’s various passes. Install the following tools:

- runt hosts our testing infrastructure. Install with:

cargo install runt - jq is a command-line JSON processor. Install with:

- Ubuntu:

sudo apt install jq - Mac:

brew install jq - Other platforms: JQ installation

- Ubuntu:

Build the compiler:

cargo build

Then run the core tests with:

runt -i core

If everything has been installed correctly, this should not produce any failing tests.

Installing the Command-Line Driver

The Calyx driver, called fud2, wraps the various compiler frontends and backends to simplify running Calyx programs. Start at the root of the repository.

Install fud2 using Cargo:

cargo install --path fud2

fud2 requires Ninja and uv, so install those if you don’t already have them.

For example, use brew install ninja uv on macOS or apt-get install ninja-build followed by curl -LsSf https://astral.sh/uv/install.sh | sh on Debian/Ubuntu.

Configure fud2 by typing:

fud2 edit-config

And put this in the resulting TOML file:

[calyx]

base = "<absolute path to Calyx repository>"

Finally, use this to set up fud2’s Python environment:

fud2 env init

You can read [more about setting up and using fud2][fud2] if you’re curious.

Simulation

There are three ways to run Calyx programs: Verilator, Icarus Verilog, and Calyx’s native interpreter. You’ll want to set up at least one of these options so you can try out your code.

Icarus Verilog is an easy way to get started on most platforms.

On a Mac, you can install it via Homebrew by typing brew install icarus-verilog.

On Ubuntu, install from source.

It is worth saying a little about the alternatives. You could consider:

- Setting up Verilator for faster performance, which is good for long-running simulations.

- Using the interpreter to avoid RTL simulation altogether.

Running a Hardware Design

You’re all set to run a Calyx hardware design now. Run the following command:

fud2 examples/tutorial/language-tutorial-iterate.futil \

-s sim.data=examples/tutorial/data.json \

--to dat --through icarus

(Change the last bit to --through verilator to use Verilator instead.)

This command will compile examples/tutorial/language-tutorial-iterate.futil to Verilog

using the Calyx compiler, simulate the design using the data in examples/tutorial/data.json, and generate a JSON representation of the

final memory state.

Congratulations! You’ve simulated your first hardware design with Calyx.

Where to go next?

- How can I setup syntax highlighting in my editor?

- How does the language work?

- Where can I see further examples with

fud2? - How do I write a frontend for Calyx?

Calyx Language Tutorial

This tutorial will familiarize you with the Calyx language by writing a minimal program by hand. Usually, Calyx code will be generated by a frontend. However, by writing the program by hand, you can get familiar with all the basic constructs you need to generate Calyx yourself!

Complete code for each example can be found in the tutorial directory in the Calyx repository.

Get Started

The basic building block of Calyx programs is a component which corresponds to hardware modules (or function definitions for the software-minded).

Here is an empty component definition for main along with an import

statement to import the standard library:

import "primitives/core.futil";

component main(@go go: 1) -> (@done done: 1) {

cells {}

wires {}

control {}

}

Put this in a file—you can call it language-tutorial-mem.futil, for example.

(The futil file extension comes from an old name for Calyx.)

You can think of a component as a unit of Calyx code roughly analogous to a function: it encapsulates a logical unit of hardware structures along with their control. Every component definition has three sections:

cells: The hardware subcomponents that make up this component.wires: A set of guarded connections between components, possibly organized into groups.control: The imperative program that defines the component’s execution schedule: i.e., when each group executes.

We’ll fill these sections up minimally in the next sections.

A Memory Cell

Let’s turn our skeleton into a tiny, nearly no-op Calyx program. We’ll start by adding a memory component to the cells:

cells {

@external mem = comb_mem_d1(32, 1, 1);

}

This new line declares a new cell called mem and the primitive component comb_mem_d1 represents a 1D memory.

You can see the definition of comb_mem_d1, and all the other standard components, in the primitives/core.futil library we imported.

This one has three parameters: the data width (here, 32 bits), the number of elements (just one), and the width of the address port (one bit).

The @external syntax is an extra bit of magic that allows us

to read and write to the memory.

Next, we’ll add some assignments to the wires section to update the value in

the memory.

Insert these lines to put a constant value into the memory:

wires {

mem.addr0 = 1'b0;

mem.write_data = 32'd42;

mem.write_en = 1'b1;

done = mem.done;

}

These assignments refer to four ports on the memory component:

addr0 (the address port),

write_data (the value we’re putting into the memory),

write_en (the write enable signal, telling the memory that it’s time to do a write), and

done (signals that the write was committed).

Constants like 32'd42 are Verilog-like literals that include the bit width (32), the base (d for decimal), and the value (42).

Assignments at the top level in the wires section, like these, are “continuous”.

They always happen, without any need for control statements to orchestrate them.

We’ll see later how to organize assignments into groups.

The complete program for this section is available under examples/tutorial/language-tutorial-mem.futil.

Compile & Run

We can almost run this program!

But first, we need to provide it with data.

The Calyx infrastructure can provide data to programs from JSON files.

So make a file called something like data.json containing something along these lines:

{

"mem": {

"data": [10],

"format": {

"numeric_type": "bitnum",

"is_signed": false,

"width": 32

}

}

}

The mem key means we’re providing the initial value for our memory called mem.

We have one (unsigned integer) data element, and we indicate the bit width (32 bits).

If you want to see how this Calyx program compiles to Verilog, here’s the fud incantation you need:

fud2 language-tutorial-mem.futil --to verilog

Not terribly interesting! However, one nice thing you can do with programs is execute them.

To run our program using Icarus Verilog, do this:

fud2 language-tutorial-mem.futil --to dat --through icarus \

-s sim.data=data.json

Using --to dat asks fud to run the program, and the extra -s verilog.data <filename> argument tells it where to find the input data.

The --through icarus-verilog option tells fud which Verilog simulator to use (see the chapter about fud for alternatives such as Verilator).

Executing this program should print:

{

"cycles": 1,

"memories": {

"mem": [

42

]

}

}

Meaning that, after the program finished, the final value in our memory was 42.

Note: Verilator may report a different cycle count compared to the one above. As long as the final value in memory is correct, this does not matter.

Add Control

Let’s change our program to use an execution schedule.

First, we’re going to wrap all the assignments in the wires section into a

name group:

wires {

group the_answer {

mem.addr0 = 1'b0;

mem.write_data = 32'd42;

mem.write_en = 1'b1;

the_answer[done] = mem.done;

}

}

We also need one extra line in the group: that assignment to the_answer[done].

Here, we say that the_answer’s work is done once the update to mem has finished.

Calyx groups have compilation holes called go and done that the control program will use to orchestrate their execution.

The last thing we need is a control program.

Add one line to activate the_answer and then finish:

control {

the_answer;

}

If you execute this program, it should do the same thing as the original group-free version: mem ends up with 42 in it.

But now we’re controlling things with an execution schedule.

If you’re curious to see how the Calyx compiler lowers this program to a Verilog-like structural form of Calyx, you can do this:

calyx language-tutorial-mem.futil

Notably, you’ll see control {} in the output, meaning that the compiler has eliminated all the control statements and replaced them with continuous assignments in wires.

The complete program for this section is available under examples/tutorial/language-tutorial-control.futil.

Add an Adder

The next step is to actually do some computation. In this version of the program, we’ll read a value from the memory, increment it, and store the updated value back to the memory.

First, we will add two components to the cells section:

cells {

@external(1) mem = comb_mem_d1(32, 1, 1);

val = std_reg(32);

add = std_add(32);

}

We make a register val and an integer adder add, both configured to work on 32-bit values.

Next, we’ll create three groups in the wires section for the three steps we want to run: read, increment, and write back to the memory.

Let’s start with the last step, which looks pretty similar to our the_answer group from above, except that the value comes from the val register instead of a constant:

group write {

mem.addr0 = 1'b0;

mem.write_en = 1'b1;

mem.write_data = val.out;

write[done] = mem.done;

}

Next, let’s create a group read that moves the value from the memory to our register val:

group read {

mem.addr0 = 1'b0;

val.in = mem.read_data;

val.write_en = 1'b1;

read[done] = val.done;

}

Here, we use the memory’s read_data port to get the initial value out.

Finally, we need a third group to add and update the value in the register:

group upd {

add.left = val.out;

add.right = 32'd4;

val.in = add.out;

val.write_en = 1'b1;

upd[done] = val.done;

}

The std_add component from the standard library has two input ports, left and right, and a single output port, out, which we hook up to the register’s in port.

This group adds a constant 4 to the register’s value, updating it in place.

We can enable the val register with a constant 1 because the std_add component is combinational, meaning its results are ready “instantly” without the need to wait for a done signal.

We need to extend our control program to orchestrate the execution of the three groups.

We will need a seq statement to say we want to the three steps sequentially:

control {

seq {

read;

upd;

write;

}

}

Try running this program again. The memory’s initial value was 10, and its final value after execution should be 14.

The complete program for this section is available under examples/tutorial/language-tutorial-compute.futil.

Iterate

Next, we’d like to run our little computation in a loop.

The idea is to use Calyx’s while control construct, which works like this:

while <value> with <group> {

<body>

}

A while loop runs the control statements in the body until <value>, which is some port on some component, becomes zero.

The with <group> bit means that we activate a given group in order to compute the condition value that determines whether the loop continues executing.

Let’s run our memory-updating seq block in a while loop.

Change the control program to look like this:

control {

seq {

init;

while lt.out with cond {

par {

seq {

read;

upd;

write;

}

incr;

}

}

}

}

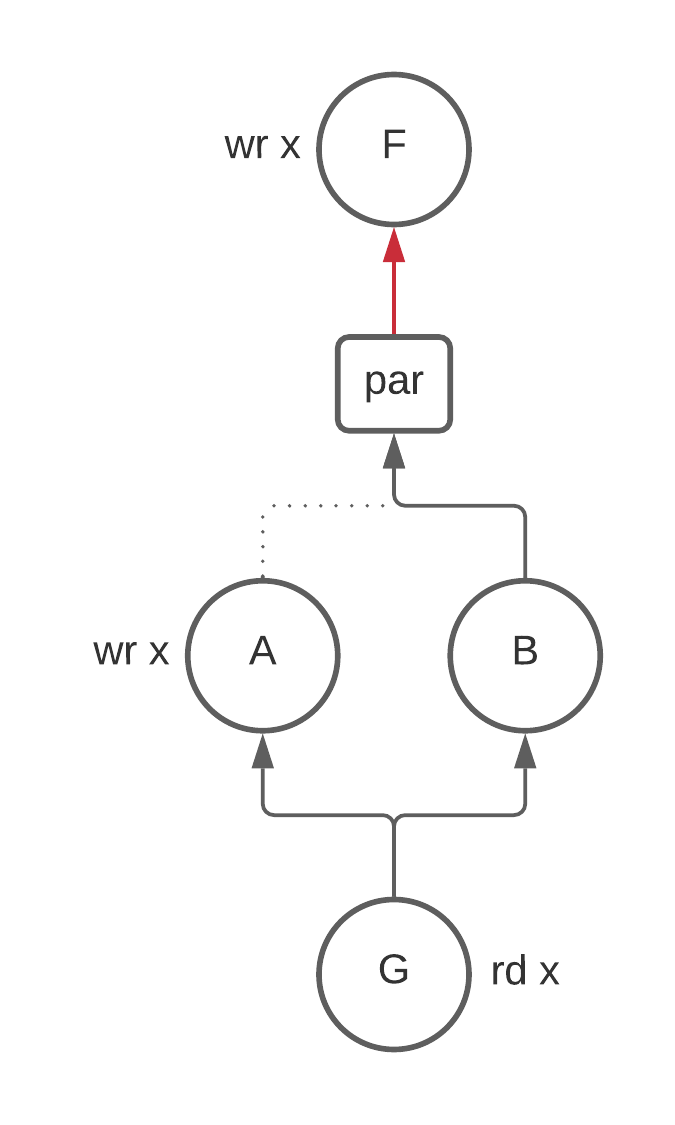

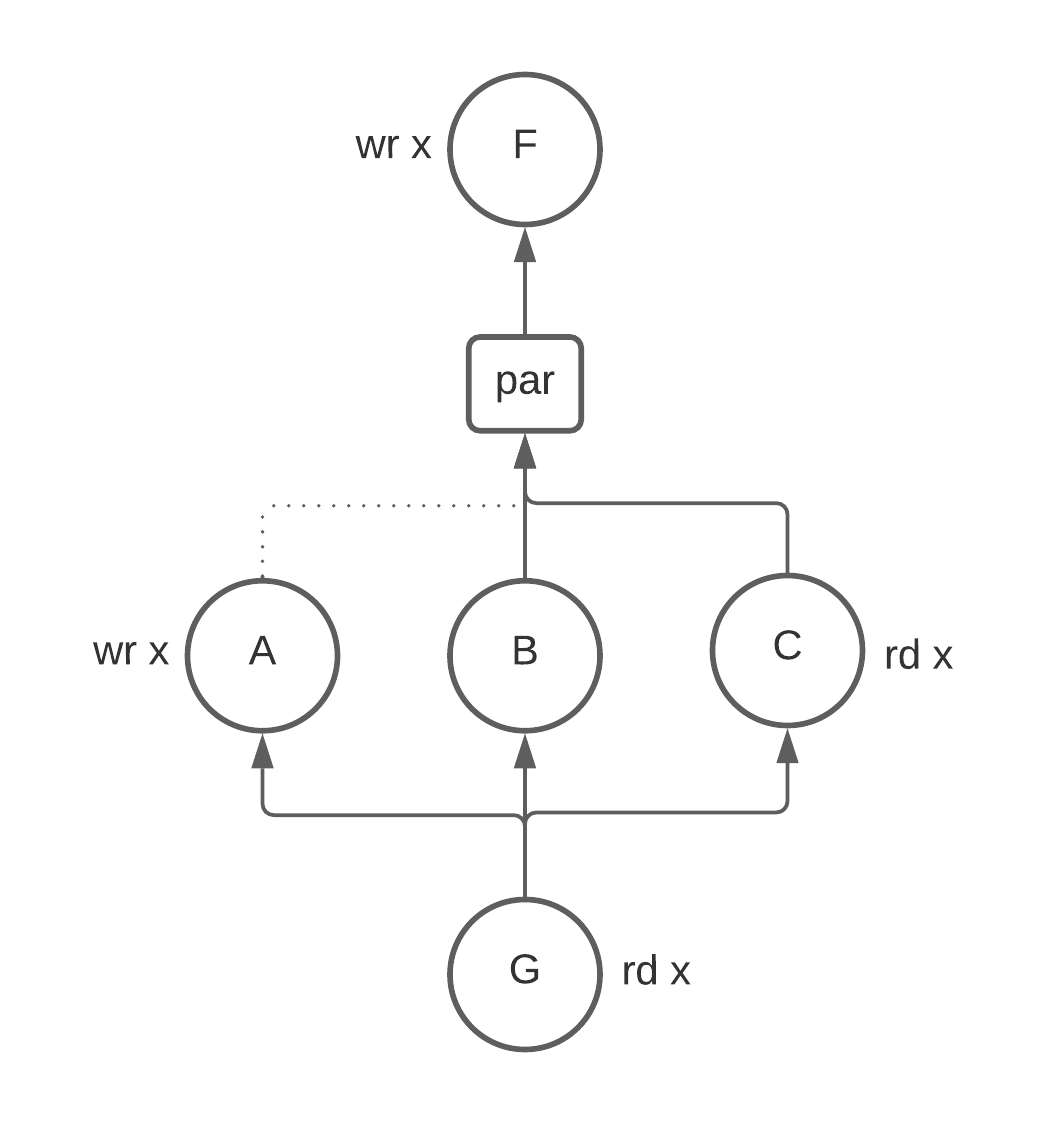

This version uses while, the parallel composition construct par, and a few new groups we will need to define.

The idea is that we’ll use a counter register to make this loop run a fixed number of times, like a for loop.

First, we have an outer seq that invokes an init group that we will write to set the counter to zero.

The while loop then uses a new group cond, and it will run while a signal lt.out remains nonzero: this signal will compute counter < 8.

The body of the loop runs our old seq block in parallel with a new incr group to increment the counter.

Let’s add some cells to our component:

counter = std_reg(32);

add2 = std_add(32);

lt = std_lt(32);

We’ll need a new register, an adder to do the incrementing, and a less-than comparator.

We can use these raw materials to build the new groups we need: init, incr, and cond.

First, the init group is pretty simple:

group init {

counter.in = 32'd0;

counter.write_en = 1'b1;

init[done] = counter.done;

}

This group just writes a zero into the counter and signals that it’s done.

Next, the incr group adds one to the value in counter using add2:

group incr {

add2.left = counter.out;

add2.right = 32'd1;

counter.in = add2.out;

counter.write_en = 1'b1;

incr[done] = counter.done;

}

And finally, cond uses our comparator lt to compute the signal we need for

our while loop. We use a comb group to denote that the assignments inside

the condition can be run combinationally:

comb group cond {

lt.left = counter.out;

lt.right = 32'd8;

}

By comparing with 8, we should now be running our loop body 8 times.

Try running this program again. The output should be the result of adding 4 to the initial value 8 times, so 10 + 8 × 4.

The complete program for this section is available under examples/tutorial/language-tutorial-iterate.futil.

Take a look at the full language reference for details on the complete language.

Multi-Component Designs

Calyx designs can define and instantiate other Calyx components that themselves

encode complex control programs.

As an example, we’ll build a Calyx design that uses a simple Calyx component to save a value in a register and use it in a different component.

We define a new component called identity that has an input port in

and an output port out.

component identity(in: 32) -> (out: 32) {

cells {

r = std_reg(32);

}

wires {

group save {

r.in = in;

r.write_en = 1'd1;

save[done] = r.done;

}

// This component always outputs the current value in r

out = r.out;

}

control {

save;

}

}

The following line defines a continuous assignment, i.e., an assignment

that is always kept active, regardless of the component’s control program

being active.

// This component always outputs the current value in r

out = r.out;

By defining this continuous assignment, we can execute our component and later observe any relevant values.

Next, we can instantiate this component in any other Calyx component.

The following Calyx program instantiates the id component and uses it to

save a value and observe it.

component main() -> () {

cells {

// Instantiate the identity element

id = identity();

current_value = std_reg(32);

}

wires {

group run_id {

// We want to "save" the value 10 inside the identity group.

id.in = 32'd10;

// All components have a magic "go" and "done" port added to them.

// Execute the component.

id.go = 1'd1;

run_id[done] = id.done;

}

group use_id {

// We want to "observe" the current value saved in id.

// The out port on the `id` component always shows the last saved

// element. We don't need to set the `go` because we're not executing

// and control.

current_value.in = id.out;

current_value.write_en = 1'd1;

use_id[done] = current_value.done;

}

}

control {

seq { run_id; use_id; }

}

}

Our first group executes the component by setting the go signal for the

component to high and placing the value 10 on the input port.

The second group simply saves the value on the output port. Importantly,

we don’t have to set the go signal of the component to high because we

don’t need to save a new value into it.

The component executes the two groups in-order.

To see the output from running this component, run the command:

fud2 examples/futil/multi-component.futil --to vcd

Passing Cells by Reference

One question that may arise when using Calyx as a backend is how to pass a cell “by reference” between components. In C++, this might look like:

#include <array>

#include <cstdint>

// Adds one to the first element in `v`.

void add_one(std::array<uint32_t, 1>& v) {

v[0] = v[0] + 1;

}

int main() {

std::array<uint32_t, 1> x = { 0 };

add_one(x); // The value at x[0] is now 1.

}

In Calyx, there are two steps to passing a cell by reference:

- Define the component in a manner such that it can accept a cell by reference.

- Pass the desired cell by reference.

When we say cell, we mean any cell, including memories of various dimensions and registers.

The language provides two ways of doing this.

The Easy Way: ref Cells

Calyx uses the ref keyword to describe cells that are passed by reference:

component add_one() -> () {

cells {

ref mem = comb_mem_d1(32, 4, 3); // A memory passed by reference.

...

}

...

}

This component defines mem as a memory that is passed by reference to the component.

Inside the component, we can use the cell as usual.

Next, to pass the memory to the component, we use the invoke syntax:

component add_one() -> () { ... }

component main() -> () {

cells {

A = comb_mem_d1(32, 4, 3); // A memory that will be passed by reference.

one = add_one();

...

}

wires { ... }

control {

invoke one[mem = A]()(); // pass A as the `mem` for this invocation.

}

}

The Calyx compiler will correctly lower the add_one component and the invoke call such that the memory is passed by reference.

In fact, any cell can be passed by reference in a Calyx program.

Read the next section if you’re curious about how this process is implemented.

Multiple memories, multiple components

To understand the power of ref cells, let us work through an example.

We will study a relatively simple arbitration logic:

the invoker has six memories of size 4 each, but needs to pretend, sometimes simulatenously, that:

- They are actually two memories of size 12 each.

- They are actually three memories of size 8 each.

We will do up two components that are designed to receive memories by reference:

component wrap2(i: 32, j: 32) -> () {

cells {

// Six memories that will be passed by reference.

ref mem1 = comb_mem_d1(32, 4, 32);

// ...

ref mem6 = comb_mem_d1(32, 4, 32);

// An answer cell, also passed by reference.

ref ans = comb_mem_d1(32, 1, 32);

}

wires { ... }

control { ... }

}

and

component wrap3(i: 32, j: 32) -> () {

cells {

// Six memories that will be passed by reference.

ref mem1 = comb_mem_d1(32, 4, 32);

// ...

ref mem6 = comb_mem_d1(32, 4, 32);

// An answer cell, also passed by reference.

ref ans = comb_mem_d1(32, 1, 32);

}

wires { ... }

control { ... }

}

That is, they have the same signature including input ports, output ports, and ref cells.

We have elided the logic, but feel free to explore the source code in Python. You can also generate the Calyx code by running python calyx-py/test/correctness/arbiter_6.py.

Now the invoker has six locally defined memories. By passing these memories to the components above, the invoker is able to wrap the same six memories two different ways, and then maintain two different fictional indexing systems at the same time.

component main() -> () {

cells {

// Six memories that will pass by reference.

@external A = comb_mem_d1(32, 4, 32);

//...

@external F = comb_mem_d1(32, 4, 32);

// Two answer cells that we will also pass.

@external out2 = comb_mem_d1(32, 1, 32);

@external out3 = comb_mem_d1(32, 1, 32);

// Preparing to invoke the components above.

together2 = wrap2();

together3 = wrap3();

}

wires {

}

control {

seq {

invoke together2[mem1=A, mem2=B, mem3=C, mem4=D, mem5=E, mem6=F, ans=out2](i=32'd1, j=32'd11)();

invoke together3[mem1=A, mem2=B, mem3=C, mem4=D, mem5=E, mem6=F, ans=out3](i=32'd2, j=32'd7)();

}

}

}

Observe: when “wrapped” into two chunks, \( 0 \le i < 2 \) and \( 0 \le j < 12 \); when wrapped into three chunks, \( 0 \le i < 3 \) and \( 0 \le j < 8 \).

The Hard Way: Without ref Cells

Proceed with caution. We recommend using the

refsyntax in almost all cases since it enables the compiler to perform more optimizations.

If we wish not to use ref cells, we can leverage the usual input and output ports to establish a call-by-reference-esque relationship between the calling and called components.

In fact, the Calyx compiler takes ref cells as descibed above and lowers them into code of the style described here.

Let us walk through an example.

Worked example: mem_cpy

In the C++ code above, we’ve constructed an “l-value reference” to the array,

which essentially means we can both read and write from x in the function

add_one.

Now, let’s allow similar functionality at the Calyx IR level.

We define a new component named add_one which represents the function

above. However, we also need to include the correct ports to both read

and write to x:

Read from x | Write to x |

|---|---|

| read_data | done |

| address ports | write_data |

| write_en | |

| address ports |

Since we’re both reading and writing from x, we’ll

include the union of the columns above:

component add_one(x_done: 1, x_read_data: 32) ->

(x_write_data: 32, x_write_en: 1, x_addr0: 1) {

One tricky thing to note is where the ports belong, i.e. should it be

an input port or an output port of the component? The way to reason about this

is to ask whether we want to receive signal from or send signal to the given wire. For example,

with read_data, we will always be receiving signal from it, so it should be an input port.

Conversely, address ports are used to mark where in memory we want to access,

so those are used as output ports.

We then simply use the given ports to both read and write to the memory passed

by reference. Note that we’ve split up the read and write to memory x in separate groups,

to ensure we can schedule them sequentially in the execution flow.

We’re also using the exposed ports of the memory through the component interface rather than,

say, x.write_data.

group read_from_x {

x_addr0 = 1'd0; // Set address port to zero.

tmp_reg.in = x_read_data; // Read the value at address zero.

tmp_reg.write_en = 1'd1;

read_from_x[done] = tmp_reg.done;

}

group write_to_x {

x_addr0 = 1'd0; // Set address port to zero.

add.left = one.out;

add.right = tmp_reg.out; // Saved value from previous read.

x_write_data = add.out; // Write value to address zero.

x_write_en = 1'd1; // Set write enable signal to high.

write_to_x[done] = x_done; // The group is done when the write is complete.

}

Bringing everything back together, the add_one component is written accordingly:

component add_one(x_done: 1, x_read_data: 32) ->

(x_write_data: 32, x_write_en: 1, x_addr0: 1) {

cells {

one = std_const(32, 1);

add = std_add(32);

tmp_reg = std_reg(32);

}

wires {

group read_from_x {

x_addr0 = 1'd0; // Set address port to zero.

tmp_reg.in = x_read_data; // Read the value at address zero.

tmp_reg.write_en = 1'd1;

read_from_x[done] = tmp_reg.done;

}

group write_to_x {

x_addr0 = 1'd0; // Set address port to zero.

add.left = one.out;

add.right = tmp_reg.out; // Saved value from previous read.

x_write_data = add.out; // Write value to address zero.

x_write_en = 1'd1; // Set write enable signal to high.

write_to_x[done] = x_done; // The group is done when the write is complete.

}

}

control {

seq { read_from_x; write_to_x; }

}

}

The final step is creating a main component from which the original component

will be invoked. In this step, it is important to hook up the proper wires in the

call to invoke to the corresponding memory you’d like to read and/or write to:

control {

invoke add_one0(x_done = x.done, x_read_data = x.read_data)

(x_write_data = x.write_data, x_write_en = x.write_en, x_addr0 = x.addr0);

}

This gives us the main component:

component main() -> () {

cells {

add_one0 = add_one();

@external(1) x = comb_mem_d1(32, 1, 1);

}

wires {

}

control {

invoke add_one0(x_done = x.done, x_read_data = x.read_data)

(x_write_data = x.write_data, x_write_en = x.write_en, x_addr0 = x.addr0);

}

}

To see this example simulated, run the command:

fud2 examples/futil/memory-by-reference/memory-by-reference.futil --to dat \

-s sim.data=examples/futil/memory-by-reference/memory-by-reference.futil.data

Multi-dimensional Memories

Not much changes for multi-dimensional arrays. The only additional step is adding

the corresponding address ports. For example, a 2-dimensional memory will require address ports

addr0 and addr1. More generally, an N-dimensional memory will require address ports

addr0, …, addr(N-1).

Multiple Memories

Similarly, multiple memories will just require the ports to be passed for each of the given memories.

Here is an example of a memory copy (referred to as mem_cpy in the C language), with 1-dimensional memories of size 5:

import "primitives/core.futil";

import "primitives/memories/comb.futil";

component copy(dest_done: 1, src_read_data: 32, length: 3) ->

(dest_write_data: 32, dest_write_en: 1, dest_addr0: 3, src_addr0: 3) {

cells {

lt = std_lt(3);

N = std_reg(3);

add = std_add(3);

}

wires {

comb group cond {

lt.left = N.out;

lt.right = length;

}

group upd_index<"static"=1> {

add.left = N.out;

add.right = 3'd1;

N.in = add.out;

N.write_en = 1'd1;

upd_index[done] = N.done;

}

group copy_index_N<"static"=1> {

src_addr0 = N.out;

dest_addr0 = N.out;

dest_write_en = 1'd1;

dest_write_data = src_read_data;

copy_index_N[done] = dest_done;

}

}

control {

while lt.out with cond {

seq {

copy_index_N;

upd_index;

}

}

}

}

component main() -> () {

cells {

@external(1) d = comb_mem_d1(32,5,3);

@external(1) s = comb_mem_d1(32,5,3);

length = std_const(3, 5);

copy0 = copy();

}

wires {

}

control {

seq {

invoke copy0(dest_done=d.done, src_read_data=s.read_data, length=length.out)

(dest_write_data=d.write_data, dest_write_en=d.write_en, dest_addr0=d.addr0, src_addr0=s.addr0);

}

}

}

Calyx Language Reference

Top-Level Constructs

Calyx programs are a sequence of import statements followed by a sequence of

extern statements or component definitions.

import statements

import "<path>" has almost exactly the same semantics to that of #include in

the C preprocessor: it copies the code from the file at path into

the current file.

extern definitions

extern definitions allow Calyx programs to link against arbitrary RTL code.

An extern definition looks like this:

extern "<path>" {

<primitives>...

}

<path> should be a valid file system path that points to a Verilog module that

defines the same names as the primitives defined in the extern block.

When run with the -b verilog flag, the Calyx compiler will copy the contents

of every such Verilog file into the generated output.

primitive definitions

The primitive construct allows specification of the signature of an external

Verilog module that the Calyx program uses.

It has the following syntax:

[comb] primitive name<attributes>[PARAMETERS](ports) -> (ports);

The syntax for primitives resembles that for components, with some additional pieces:

- The

combkeyword signals that the primitive definition wraps purely combinational RTL code. This is useful for certain optimizations. - Attributes specify useful metadata for optimization.

- PARAMETERS are named compile-time (metaprogramming) parameters to pass to

the Verilog module definition. The primitive definition lists the names of

integer-valued parameters; the corresponding Verilog module definition should

have identical

parameterdeclarations. Calyx code provides values for these parameters when instantiating a primitive as a cell. - The ports section contain sized port definitions that can either be positive number or one of the parameter names.

For example, the following is the signature of the std_reg primitive from the

Calyx standard library:

primitive std_reg<"state_share"=1>[WIDTH](

@write_together(1) @data in: WIDTH,

@write_together(1) @interval(1) @go write_en: 1,

@clk clk: 1,

@reset reset: 1

) -> (

@stable out: WIDTH,

@done done: 1

)

The primitive defines one parameter called WIDTH, which describes the sizes for

the in and the out ports.

Inlined Primitives

Inlined primitives do not have a corresponding Verilog file, and are defined within Calyx. The Calyx backend then converts these definitions into Verilog.

For example, the std_unsyn_mult primitive is inlined:

comb primitive std_unsyn_mult<"share"=1>[WIDTH](left: WIDTH, right: WIDTH) -> (out: WIDTH) {

assign out = left * right;

};

This can be useful when a frontend needs to generate both Calyx and Verilog code at the same time. The backend ensures that the generated Verilog module has the correct signature.

Calyx Components

Components are the primary encapsulation unit of a Calyx program. They look like this:

component name<attributes>(ports) -> (ports) {

cells { ... }

wires { ... }

control { ... }

}

Like primitive definitions, component signatures consist of a name, an optional list of attributes, and input/output ports.

Unlike primitives, component definitions do not have parameters; ports must have a concrete (integer) width.

A component encapsulates the control and the hardware structure that implements

a hardware module.

Well-formedness: The

controlprogram of a component must take at least one cycle to finish executing.

Combinational Components

Using the comb keyword before a component definition marks it as a purely combinational component:

comb component add(left: 32, right: 32) -> (out: 32) {

cells {

a = std_add(32);

}

wires {

a.left = left;

a.right = right;

out = a.out;

}

}

A combinational component does not have a control section, can only use other comb components or primitives, and performs its computation combinationally.

Ports

A port definition looks like this:

[@<attr>...] <name>: <width>

Ports have a bit width but are otherwise untyped. They can also include optional attributes. For example, this component definition:

component counter(left: 32, right: 32) -> (@stable out0: 32, out1: 32) { .. }

defines two input ports, left and right, and two output ports,

out0 and out1.

All four ports are 32-bit signals.

Additionally, the out0 port has the attribute @stable.

cells

A component’s cells section instantiates a set of sub-components.

It contains a list of declarations with this syntax:

[ref]? <name> = <comp>(<param...>);

Here, <comp> is the name of an existing primitive or component definition, and

<name> is the fresh, local name of the instance.

The optional ref parameter turns the cell into a by-reference cell.

Parameters are only allowed when instantiating primitives, not Calyx-defined components.

For example, the following definition of the counter component instantiates a

std_add and std_reg primitive as well as a foo Calyx component

component foo() -> () { ... }

component counter() -> () {

cells {

r = std_reg(32);

a = std_add(32);

f = foo();

}

wires { ... }

control { ... }

}

When instantiating a primitive definition, the parameters are passed within the

parenthesis.

For example, we pass 32 for the WIDTH parameter of the std_reg in the above

instantiation.

Since an instantiation of a Calyx component does not take any parameters, the parameters

are always empty.

The wires Section

A component’s wires section contains guarded assignments that connect ports

together. The assignments can either appear at the top level, making them

continuous assignments, or be organized into named

group and comb group definitions.

Guarded Assignments

Assignments connect ports between two cells together, with this syntax:

<cell>.<port> = [<guard> ?] <cell>.<port>;

The left-hand and right-hand side are both port references, which name a specific input or output port within a cell declared within the same component. The optional guard condition is a logical expression that determines whether the connection is active.

For example, this assignment:

r.in = add.out;

unconditionally transfers the value from a port named out in the add cell to r’s in port.

Assignments are simultaneous and non-blocking. When a block of assignments runs, they all first read their right-hand sides and then write into their left-hand sides; they are not processed in order. The result is that the order of assignments does not matter. For example, this block of assignments:

r.in = add.out;

add.left = y.out;

add.right = z.out;

is a valid way to take the values from registers y and z and put the sum into r. Any permutation of these assignments is equivalent.

Guards

An assignment’s optional guard expression is a logical expression that produces a 1-bit value, as in these examples:

r.in = cond.out ? add.out;

r.in = !cond.out ? 32'd0;

Using guards, Calyx programs can assign multiple different values to the same

port. Omitting a guard expression is equivalent to using 1'd1 (a constant

“true”) as the guard.

Guards can use the following constructs:

port: A port access on a defined cell, such ascond.out, or a literal, such as3'd2.port op port: A comparison between values on two ports. Valid instances ofopare:>,<,>=,<=,==!guard: Logical negation of a guard valueguard | guard: Disjunction between two guardsguard & guard: Conjunction of two guards

Well-formedness: For each input port on the LHS, only one guard should be active in any given cycle during the execution of a Calyx program.

Continuous Assignments

When an assignment appears directly inside a component’s wires section, it

is called a continuous assignment and is permanently active, even when the

control program of the component is inactive.

group definitions

A group is a way to name a set of assignments that together represent some

meaningful action:

group name<attributes> {

assignments...

name[done] = done_cond;

}

Assignments within a group can be reasoned about in isolation from assignments in other groups. Unlike continuous assignments, a group’s encapsulated assignments only execute as dictated by the control program. This means that seemingly conflicting writes to the same ports are allowed:

group foo {

r.in = 32'd10;

foo[done] = ...;

}

group bar {

r.in = 32'd22;

bar[done] = ...;

}

However, group assignments must not conflict with continuous assignments defined in the component:

group foo {

r.in = 32'd10; ... // Malformed because it conflicts with the write below.

foo[done] = ...

}

r.in = 32'd50;

Groups can take any (nonzero) number of cycles to complete. To indicate to the

outside world when their execution has completed, every group has a special

done signal, which is a special port written as <group>[done]. The group

should assign 1 to this port to indicate that its execution is complete.

Groups can have an optional list of attributes.

Well-formedness: All groups are required to run for at least one cycle. (Sub-cycle logic should use

comb groupinstead.)

comb group definitions

Combinational groups are a restricted version of groups which perform their computation purely combinationally and therefore run for “less than one cycle”:

comb group name<attributes> {

assignments...

}

Because their computation is required to run for less than a cycle, comb group

definitions do not specify a done condition.

Combinational groups cannot be used within normal control

operators.

Instead, they only occur after the with keyword in a control program.

The Control Operators

The control section of a component contains an imperative program that

describes the component’s behavior. The statements in the control program

consist of the following operators:

Group Enable

Simply naming a group in a control statement, called a group enable, executes the group to completion. This is a leaf node in the control program.

invoke

invoke acts like the function call operator for Calyx and has the following

syntax:

invoke instance[ref cells](inputs)(outputs) [with comb_group];

instanceis the name of the cell instance that needs to be invoked.inputsandoutputsdefine connections for a subset of input and output ports of the instance.- The

with comb_groupsection is optional and names a combinational group that is active during the execution of theinvokestatement. ref cellsis a list of cell names to pass to the invoked component’s required cell reference. (It can be omitted if the invoked component contains no cell references.)

Invoking an instance runs its control program to completion before returning.

Any Calyx component or primitive that implements the go-done interface can be invoked.

Like the group enable statement, invoke is a leaf node in the control program.

seq

The syntax for sequential composition is:

seq { c1; ...; cn; }

where each ci is a nested control statement.

Sequences run the control programs c1…cn in sequence, guaranteeing that

each program runs fully before the next one starts executing.

seq does not provide any cycle-level guarantees on when a succeeding

group starts executing after the previous one finishes.

par

The syntax for parallel composition is:

par { c1; ...; cn; }

The statement runs the nested control programs c1…cn in parallel, guaranteeing that

each program only runs once.

par does not provide any guarantees on how the execution of child programs

is scheduled.

It is therefore not safe to assume that all children begin execution at the

same time.

Well-formedness: The assignments in the children

c1…cnshould never conflict with each other.

if

The syntax is:

if <port> [with comb_group] {

true_c

} else {

false_c

}

The conditional execution runs either true_c or false_c using the value of

<port>.

The optional with comb_group syntax allows running a combinational group

that computes the value of the port.

Well-formedness: The combinational group is considered to be running during the entire execution of the control program and therefore should not have conflicting assignments with either

true_corfalse_c.

while

The syntax is:

while <port> [with comb_group] {

body_c

}

Repeatedly executes body_c while the value on port is non-zero.

The optional with comb_group enables a combinational group that computes the

value of port.

Well-formedness: The combinational group is considered active during the execution of the while loop and therefore should not have conflicting assignments with

body_c.

repeat

The syntax is:

repeat <num_repeats> {

body_c

}

Repeatedly executes the control program body_c num_repeat times in a row.

The go-done Interface

By default, Calyx components implement a one-sided ready-valid interface called

the go-done interface.

During compilation, the Calyx compiler will add an input port marked with the attribute @go and an output port marked with the attribute @done to the interface of the component:

component counter(left: 32, right: 32, @go go: 1) -> (out: 32, @done done: 1)

The interface provides a way to trigger the control program of the counter using

assignments.

When the go signal of the component is set to 1, the control program starts

executing.

When the component sets the done signal to 1, its control program has finished

executing.

Well-formedness: The

gosignal to the component must remain set to 1 while the done signal is not 1. Lowering thegosignal before thedonesignal is set to 1 will lead to undefined behavior.

The clk and reset Ports

The compiler also adds special input ports marked with @clk and @reset to the

interface.

By default, Calyx components are not allowed to look at or use these signals.

They are automatically threaded to any primitive that defines @clk or

@reset ports.

Advanced Concepts

ref cells

Calyx components can specify that a cell needs to be passed “by reference”:

// Component that performs mem[0] += 1;

component update_memory() -> () {

cells {

ref mem = comb_mem_d1(...)

}

wires { ... }

control { ... }

}

When invoking such a component, the calling component must provide a binding for each defined cell:

component main() -> () {

cells {

upd = update_memory();

m1 = comb_mem_d1(...);

m2 = comb_mem_d2(...);

}

wires { ... }

control {

seq {

invoke upd[mem=m1]()(); // Pass `m1` by reference

invoke upd[mem=m2]()(); // Pass `m2` by reference

}

}

}

As the example shows, each invocation can take different bindings for each ref cell.

In the first invocation, we pass in the concrete cell m1 while in the second we pass

in m2.

See the tutorial for longer example on how to use this feature.

Subtyping for ref cells

When providing bindings for ref cells, one must provide a concrete cell that is a

subtype of the ref cell. A cell a is a subtype of cell b if the component

defining a has at least the same ports as the component defining b.

Consider the following component definitions:

component b(in_1 : 1) -> (out_1 : 1) {

// cells, wires, and control blocks

}

component a(in_1 : 1, in_2 : 1) -> (out_1 : 1, out_2 : 1){

// cells, wires, and control blocks

}

Because the component definition of a has the ports in_1 and out_1, a concrete cell of a can be bound to a ref cell of component b:

//Expects a `ref` cell of component `b`

component c() -> () {

cells{

ref b1 = b();

}

wires{...}

control{...}

}

component main() -> () {

cells {

c_cell = c();

b_cell = b();

a_cell = a(); //recall `a` is a subtype of `b`

}

wires { ... }

control {

seq {

// Pass `b_cell` by reference. Both are `b1` and `b_cell` are defined by the component `b`

invoke c[b1=b_cell]()();

// Pass `a_cell` by reference. The `ref` cell and concrete cell are defined by different components,

// but this is allowed because `a` is a subtype of `b`.

invoke c[b1=a_cell]()();

}

}

}

Ports are considered to be equal with respect to subtyping if they have the same name, width, direction, and attributes.

Note: The notion of subtyping described above, that only checks for port equivalence between components, is incomplete. A complete, correct definition of subtyping would require that for

ato be a subtype ofb, for everyrefcell expected ina, componentbmust expect arefcell that is a subtype of the associatedrefcell ina(note that the relationship between these nestedrefcells is opposite the relationship ofaandb).Because nested

refcells are not currently allowed in Calyx, this is not a problem in practice.

Data Format

Calyx’s fud2-based workflows specifies a JSON-based data format which can be used with software simulators.

External memories

First, a Calyx program must mark memories using the @external attribute to tell the compiler that the memory is used as either an input or an ouput.

component main() {

cells {

@external ext = comb_mem_d1(...);

internal = comb_mem_d1(...);

}

}

In the above program, the memory ext will be assumed to be an input-output memory by the compiler while internal is considered to be an internal memory.

For all external memories, the compiler will generate code to read initial values and dump out final values.

The external attribute is recognized for all the std_mem and seq_mem primitives.

The @external attribute can only be used on the top-level component.

When the

--synthesisflag is passed to the compiler, this behavior is disabled and instead the memories are turned into ports on the top-level component.

The Data Format

The JSON-based data format allows us to provide inputs for a memory and specify how the values should be interpreted:

{

"mem": {

"data": [10],

"format": {

"numeric_type": "bitnum",

"is_signed": false,

"width": 32

}

}

}

The data field represents the initial data for the memory and can use mutlidimensional arrays to describe it. For example, the following is the initial data for a two-dimensional (comb_mem_d2) memory:

{

"data": [ [1, 2], [3, 4] ],

"format": {...}

}

The format specifier tells fud how to interpret the values in the data field. The original program specifies that all values should be treated as 32-bit, unsigned values.

In order to specify fixed-point values, we must specify both the total width and fraction widths:

"root": {

"data": [

0.0

],

"format": {

"numeric_type": "fixed_point",

"is_signed": false,

"width": 32,

"frac_width": 16

}

}

The format states that all values have a fractional width of 16-bits while the remainder is used for the integral part.

Note:

fudrequires that for each memory marked with the@externalattribute in the program.

Using fud

All software simulators supported by fud, including Verilator and Icarus Verilog, as well as the Calyx interpreter can use this data format.

To pass a JSON file with initial values, use the -s verilog.data flag:

# Use Icarus Verilog

fud2 --to dat --through icarus <CALYX FILE> -s sim.data=<JSON>

# Use Verilator

fud2 --to dat --through verilator <CALYX FILE> -s sim.data=<JSON>

# Use Cider, the Calyx interpreter

fud2 --to dat --through cider <CALYX FILE> -s sim.data=<JSON>

Generating Random Values

Often times, it can be useful to automatically generate random values for a large memory. The data-gen tool takes a Calyx program as an input and automatically generates random values for each memory marked with @external in the above data format.

Static Timing

The features discussed below have been available since Calyx version 0.2.1.

By default, Calyx programs use a latency-insensitive, or dynamic, model of computation. This means that the compiler does not know, track, or guarantee the number of cycles it takes to perform a computation or run a control operator. This is in contrast to a latency-sensitive, or static, model of computation, where the number of cycles a component needs is known to, and honored by, the compiler.

In general, latency-insensitivity makes it easier to compose programs. It grants the compiler freedom to schedule operators however it wants, as long as the schedule meets the program’s dataflow constraints. It also prevents code from implicitly depending on the state of other code running in parallel.

However, there are two drawbacks to this approach. First, the generated hardware may not be efficient: if the compiler does not know how long computations take, it must schedule them conservatively. Second, it is impossible for latency-insensitive programs to interact with latency-sensitive hardware implemented in RTL; this means that the use of black-box hardware designs requires costly handshaking logic at the interface.

To address these issues, Calyx provides a static qualifier that modifies components and groups, along with static variants of other control operators.

Broadly, the static qualifier is a promise to the compiler that the specifed component or group will take exactly the specified number of cycles to execute.

The compiler is free to take advantage of this promise to generate more efficient hardware.

In return, the compiler must access out-ports of static components only after the specified number of cycles have passed, or risk receiving incorrect results.

Static Constructs in the Calyx IL

We will now discuss the static constructs available in the Calyx IL, along with the guarantees they come with.

Static Components

Briefly consider a divider component, std_div_pipe, which divides the value left by the value right and puts the result, which is in two parts, in two out ports.

This component is dynamic; its latency is unknown.

primitive std_div_pipe[W](go: 1, left: W, right: W) -> (out_remainder: W, out_quotient: W, done: 1);

A client of the divider must pass two inputs left and right, raise the go signal, and wait for the component itself to raise its done signal.

The client can then read the result from the two out ports.

That is, it obeys the go-done interface.

Compare this to a multiplier component, std_mult_pipe, which has a similar signature but whose latency is known to be three cycles.

We declare it as follows:

static<3> primitive std_mult_pipe[W](go: 1, left: W, right: W) -> (out: W);

The fact that the multiplier has one out port while the divider has two is not relevant to the discussion.

The differences that do matter are:

- The

staticqualifier is used to declare the component as static and to specify its latency (3 cycles). - The

doneport is absent from the multiplier’s signature.

A client of the multiplier must pass two inputs and raise the go signal as before.

However, the client need not then wait for the component to indicate completion.

The client can simply and safely assume that the result will be available after 3 cycles.

This is a guarantee that the author of the component has made to the client, and the client is free to take advantage of it.

Static Groups and Relative Timing Guards

Much like components, groups can be declared as static. Since groups are just unordered sets of assignments, it pays to have a little more control over the scheduling of the assignments within a group. To this end, static groups have a unique feature that ordinary dynamic groups do not: relative timing guards.

Consider this group, which multiplies 6 and 7 and stores the result in ans.

static<4> group mult_and_store {

mult.left = %[0:3] ? 6;

mult.right = %[0:3] ? 7;

mult.go = %[0:3] ? 1;

ans.in = %3 ? mult.out;

ans.write_en = %3 ? 1;

}

The static<4> qualifier specifies that the group should take 4 cycles to execute.

The first three assignments are guarded (using the standard ? separator) by the relative timing guard %[0:3].

In general, a relative timing guard %[i:j] is true in the half-open interval from cycle i to

cycle j of the group’s execution and false otherwise.

In our case, the first three assignments execute only in the first three cycles of the group’s execution.

The guard %3, which we see immediately afterwards, is syntactic sugar for %[3:4].

We have used it in this case to ensure that the last two assignments execute only in the last cycle of the group’s execution.

Static Control Operators

Calyx provides static variants of each of its control operators.

While dynamic commands may contain both static and dynamic children, static commands must only have static children.

In the examples below, assume that A5, B6, C7, and D8 are static groups with latencies 5, 6, 7, and 8, respectively.

static seq, a static version of seq

If we have static seq { A5; B6; C7; D8; }, we can guarantee that the latency of the entire operation is the sum of the latencies of its children: 5 + 6 + 7 + 8 = 26 cycles in this case.

We can also guarantee that each child will begin executing exactly one cycle after the previous child has finished.

In our case, for example, B6 will begin executing exactly one cycle after A5 has finished.

static par, a static version of par

If we have static par { A5; B6; C7; D8; }, we can guarantee that the latency of the entire operation is the maximum of the latencies of its children: 8 cycles in this case.

Further, all the children of a static par are guaranteed to begin executing at the same time.

The children can rely on this “lockstep” behavior and can communicate with each other.

Inter-thread communication of this sort is undefined behavior in a standard, dynamic, par.

As a corollary, consider this useful trick in the case when we need A5 and D8 to run in parallel, but we do not want them to start at the same time.

Instead, in order to support some inter-thread communication, we want A5 to start three cycles after D8.

static<3> group dummy_group { }

static par {

static seq { dummy_group; A5; }

D8;

}

We have not elided the body of dummy_group; it can literally be left blank.

During compilation, no hardware will be generated for dummy_group. It is simply a placeholder to delay the start of A5 by three cycles.

static if, a static version of if

If we have static if { A5; B6; }, we can guarantee that the latency of the entire operation is the maximum of the latencies of its children: 6 cycles in this case.

static repeat, a static version of repeat

If we have static repeat 7 { B6; }, we can guarantee that the latency of the entire operation is the product of the number of iterations and the latency of its child: 7 × 6 = 42 cycles in this case.

The body of a static repeat is guaranteed to begin executing exactly one cycle after the previous iteration has finished.

Calyx’s while loop is unbouded and so it does not have a static variant.

static invoke, a static version of invoke

Its latency is the latency of the invoked cell.

Experimental: Synchronization

Calyx’s default semantics do not admit any predictable form of language-level synchronization in presence of parallelism. We’re currently experimenting with a suite of new primitives that add synchronization to the language.

@sync attribute

Motivation

Consider the following control program in calyx:

par {

/// thread A

while lt.out with comp {

seq {

incr_idx;

add_r_to_accm;

}

}

/// thread B

while lt.out with comp {

seq {

incr_r;

}

}

}

where groups add_r_to_accm and incr_r reads value and increments value in register r, respectively, as indicated by their names.

Because calyx does not make any guarantee of the order of execution for threads running in parallel, it is impossible for us to determine which thread will access r first for each iteration.

Nondeterminism when running parallel threads is beneficial on the compiler’s end, as it will give the compiler more freedom for optimization. However, we sometimes do want to give parallel threads a measure of ordering while still taking advantage of the performance boost of parallelism. The @sync attribute allows us to do that.

Using the @sync attribute

Now we want to modify the program above so that in every iteration, thread A always reads after thread B finishes incrementing using the @sync attribute with the following:

par {

// thread A

while lt.out with comp {

seq {

incr_idx;

@sync(1);

add_r_to_accm;

@sync(2);

}

}

// thread B

while lt.out with comp {

seq {

incr_r;

@sync(1);

@sync(2);

}

}

}

First and foremost, always remember to import “primitives/sync.futil” when using the @sync attribute.

The @sync syntax can only be marked with empty statements. @sync means that the thread

marked with a certain value, now called barrier index, for this attribute, must stop and wait for all other threads marked with the same barrier index to arrive, at which point they can proceed.

In the modified program above, we see that incr_idx and incr_r must both finish in order for either thread to go forth. Because add_r_to_accm is executed after incr_idx in thread A, we know that in each iteration, incr_r will always increment r before add_r_to_accm reads it. We’ve also inserted another barrier at the end of the while loops for each thread, which essentially means add_r_to_accm has to finish before either thread enters the next iteration.

Synchronization in Branches

We can also have “barriers” in if branches:

par {

// thread 1

while lt.out with comp {

if eq.out with st_0 {

seq {

prod_0;

@sync(1);

switch_to_st_1;

@sync(2);

}

}

else {

seq {

prod_1;

@sync(1);

switch_to_st_0;

@sync(2);

}

}

}

// thread 2

while lt.out with comp {

seq {

@sync(1);

reg_to_mem;

incr_idx;

@sync(2);

}

}

}

}

In this control program, both branches of thread 1 have statements marked with @sync(1),

which syncs it up with thread 2.

Be really really careful when using the @sync attribute in conditional branches!

If the other thread sharing one “barrier” with your thread is blocked unconditionally,

then you would probably want to have the same @sync value in both branches; since

having the same @sync value in only one branch would likely lead to a “deadlock”

situation: if thread A is running in the unlocked branch while the thread B

has a “barrier” that is expecting two threads, thread B may never proceed because

thread A never arrives at the “barrier”.

More Complex Example

If you want to see a more complex design using @sync, see

sync-dot-product

Limitations

Currently we only support two threads sharing the same “barrier”, i.e., only two threads can have control with the @sync attribute marked with the same value.

Undefined Behaviors

Undefined behavior in Calyx is either intentional or unintentional. This page tracks the various issues discussing the undefined behaviors that are known to currently exist in Calyx.

Interface Signals

The go and done signals form the two core interface signals in Calyx. Their semantics are baked into the compiler and pervasively used to define the meaning of programs.

Undriven Ports

Calyx’s continuous assignments do not make any static guarantees about which ports need to be driven when. Current efforts attempt to codify when reading from an undriven port is incorrect.

Semantics of par

par blocks in Calyx represent parallel execution of groups. Currently, there is no clear semantics for interactions between groups executing in parallel. The interpreter implements a form of lockstep semantics that disallows certain forms of concurrent reads and writes while the code generated by the compiler allows for arbitrary communication.

Isolation Guarantees

Calyx groups have a strong isolation guarantee—they must execute for at least one cycle and guarantee that signals inside are not visible after they are done executing.

However, the isolation guarantees for combinational groups, continuous assignments, and with blocks is not clear.

Attributes

Calyx has an attribute system that allows information to be associated with every basic Calyx construct. This information can then be used to optimize the program or change how the program is compiled.

Attributes can decorate lots of things in Calyx: components, groups, cells, ports, and control statements.

The syntax looks like name<"attr"=value> for components and groups or @attr(value) for other constructs.

Attributes always map keys to values.

Because it’s common to have a “Boolean” attribute that always maps to the value 1, the syntax @attr is a shorthand for @attr(1).

Here is the syntax for attributes in different parts of the AST:

Component and Port Attributes

component main<"static"=10>(@go go: 1) -> (@done done: 1) {

...

}

Cell Attributes

cells {

@external mem = comb_mem_d1(32, 8, 4);

reg = std_reg(32);

...

}

Group Attributes

group cond<"promotable"=1> {

...

}

Control Attributes

control {

@promotable(3) seq {

@promotable(1) A;

@promotable(2) B;

}

}

Meaning of Attributes

toplevel

The entrypoint for the Calyx program. If no component has this attribute, then

the compiler looks for a component named main. If neither is found, the

compiler errors out.

go, done, clk and reset

These four ports are part of the interface to Calyx components.

These are automatically added by the parser if they are missing from the component definition.

go and done provide the mechanism for how an “outer” component invokes an “inner” cell that it contains.

clk and reset thread through the global clock and resetting signal in a design.

nointerface

By default, interface ports are automatically added to a component by the parser if they are missing. Adding this attribute disables this behavior.

external

The external attribute has meaning when it is attached to a cell.

It has two meanings:

- If the

externalizecompiler alias is enabled, the cell is turned into an “external” cell by exposing all its ports through the current component and rewriting assignments to the use the ports. See the documentation on externalize for more information. - If the cell is a memory and has an

externalattribute on it, the Verilog backend (-b verilog) generates code to read<cell_name>.datto initialize the memory state and dumps out its final value after execution.

static(n)

This is now deprecated. See the promotable and interval attributes.

promotable(n)

Can be attached to groups, control, and @go ports of components.

This tells the compiler how long the group/control would take if it were promoted

to static.

This is just a hint, and is free to be ignored by the compiler.

However, the compiler may use it in the static-promotion pass to upgrade dynamic

constructs to static<n> ones.

interval(n)

This can be attached to the @go ports of dynamic components (both primitives or

Calyx-defined components).

This tells the compiler that if you assert the @go port for cycles [0,n), then

the done signal will be asserted on cycle n.

This is different from @promotable since it provides a guarantee rather than a hint.

Attach @interval to @go ports essentially means that the component can serve

“double duty”: it can be used in both static and dynamic contexts.

This is common for things like registers.

inline

Used by the inline pass on cell definitions. Instructs the pass to completely

inline the instance into the parent component and replace all invokes of the

instance with the control program of the instance.

stable

Used by the canonicalize pass.

Only meaningful on output ports and states that their value is provided by

a sequential element and is therefore available outside combinational time.

For example, after invoking a multiplier, the value on its out port remains

latched till the next invocation.

For example

cells {

m = std_mult_pipe(32);

}

wires {

group use_m_out { // uses m.out }

}

control {

invoke m(left = 32'd10, right = 32'd4)();

use_m_out;

}

The value of m.out in use_m_out will be 32'd40.

This annotation is currently used by the primitives library and the Dahlia frontend.

share

Can be attached to a component and indicates that a component can be shared

across groups. This is used by the -p cell-share to decide which components

can be shared.

state_share

Can be attached to a component and indicates that a component can be shared

across groups. Different than share since state_share components can have

internal state.

This is used by -p cell-share to decide which components can be shared.

Specifically, a component is state shareable if each write to

that component makes any previous writes to the component irrelevant.

The definition of a “write to a component” is an activiation of

the component’s “go” port, followed by a read of the component’s “done” port (in

other words, the read of a “done” port still counts as part of a “write” to the

component).

For c1 and c2, instances of a state_shareable component:

instantiate c1 instantiate c2

any write to c1 any write to c2

write value v to port p in c1 write value v to port p in c2

c1 and c2 should be equal.

bound(n)

Used in infer-static-timing and static-timing when the number of iterations

of a While control is known statically, as indicated by n.

generated

Added by ir::Builder to denote that the cell was added by a pass.

clk

Marks the special clock signal inserted by the clk-insertion pass, which helps with lowering to RTL languages that require an explicit clock.

write_together(n)

Used by the papercut pass.

Defines a group n of signals that all must be driven together:

primitive comb_mem_d2<"static"=1>[WIDTH, D0_SIZE, D1_SIZE, D0_IDX_SIZE, D1_IDX_SIZE](

@write_together(2) addr0: D0_IDX_SIZE,

@write_together(2) addr1: D1_IDX_SIZE,

@write_together(1) write_data: WIDTH,

@write_together(1) @go write_en: 1,

...

) -> (...);

This defines two groups.

The first group requires that write_en and write_data signals together

while the second requires that addr0 and addr1 are driven together.

Note that @write_together specifications cannot encode implication of the

form “if port x is driven then y should be driven”.

read_together(n)

Used by papercut and canonicalize.

Defines a combinational path n between a set of an input ports and an output

port.

primitive comb_mem_d1<"static"=1>[WIDTH, SIZE, IDX_SIZE](

@read_together(1) addr0: IDX_SIZE, ...

) -> (

@read_together(1) read_data: WIDTH, ...

);

This requires that when read_data is used then addr0 must be driven.

Note that each group must have exactly one output port in it.

@data

Marks a cell or a port as a purely datapath component, i.e., the output does not propagate into a guard or another control signal. See this issue for the full set of constraints.

When we have following two conditions:

- An input port is marked with

@datain the component definitions, and - The cell instance is marked as

@data

The backend generates 'x as the default value for the assignment to the port instead of '0. Additionally, if the port has exactly one assignment, the backend removes the guard entirely and produces a continuous assignment.

This represents the optimization:

cells {

@data cell = cell_component();

}

wires {

cell.in = g ? out : 'x

}

into:

// cells are same as before

wires {

cell.in = out;

}

Since the value 'x can be replaced with anything.

(Currently Calyx does not support assignments of the form rhs = guard ? lhs1: lhs2,

so the syntax isn’t 100% accurate.

This optimization happens when translating from Calyx to Verilog.

At some point we may want to make this optimization happen during a Calyx pass.)

@data can also appear on the output ports of a Calyx component.

This represents when we want to optimize assignments to the port within a Calyx component.

Consider the following example:

component main() ->(...@data out_write_data: 32....) {

...

wires {

out_write_data = g ? out : 'x // we want to optimize to out_write_data = out

}

}

Note that we are trying to perform this optimization within the component, i.e.,

out_write_data is a port on the signature of the component itself, not an instance.

Also note that in this case, @data appears on the output port of the component’s signature.

When @data appears on an output port of a Calyx component, it means that assignments

to this port within the component will get the “default to 'x” semantics and optimizations.

Note that there is no requirement of having the “cell instance is marked as @data”,

as before.

@protected

Marks that the cell should not be removed or shared during optimization.

@pos

Contains the position IDs for source location(s) of the marked component/cell/group/control statement. Note that @pos is a set attribute (uses curly brace syntax) and can contain multiple values.

fud2: The Calyx Driver

fud2 is the compiler driver tool for orchestrating the Calyx ecosystem.

Working with Calyx involves a lot of command-line tools. For example, an incomplete yet daunting list of CLI tools used by Calyx is:

- All the Calyx [frontends][]

- Calyx compiler and its various command line tools

- Verilator, the Verilog simulation framework used to test Calyx-generated designs

- Waveform viewers to see the results of simulation

fud2 aims to provide a simple interface for using these toolchains and executing them in a pipeline. The source for fud2 is here.

“Original” fud was replaced by fud2 in a push to add some fundamental new capabilities and resolve some underlying problems with fud.

Set Up

If you already have Calyx installed, you can cargo install --path fud2 from this repository’s root to automatically add the binary to your path.

Alternatively, if you would like to work on development of fud2 and/or keep up with the latest changes when you git pull, you can build it along with everything else in this monorepo with cargo build.

You might then want to do something like ln -s `pwd`/target/debug/fud2 ~/.local/bin for easy access to the fud2 binary.

fud2 depends on Ninja. Install it using your OS package manager or by downloading a binary.

Configuration

Run the following command to edit fud2’s configuration file (usually ~/.config/fud2.toml):

fud2 edit-config

Add these lines:

[calyx]

base = "<path to calyx checkout>"

Environment Setup

Some parts of Calyx and fud2 require setting up and installing various python packages. With Python removing support for installing packages system wide, it’s recommended to install relevant packages into a python virtual environment. fud2 can set up this environment for you and instruct fud2 to automatically run relevant tools in the correct virtual environment.

To do this, simply run:

fud2 env init

There may be some cases where you want to manually interact with the python virtual environment. The virtual environment is installed to $XDG_DATA_HOME/fud2/venv (usually ~/.local/share/fud2/venv). You can activate the virtual environment in your current shell with:

fud2 env activate

Now you’re ready to use fud2.

General Use

You can see complete command-line documentation with fud2 --help.

But generally, you want to do something like this:

fud2 <IN> -o <OUT>

For example, use this to compile a Calyx program to Verilog:

fud2 foo.futil -o bar.sv

fud2 tries to automatically guess the input and output formats using filename extensions.

If that doesn’t work, you can choose for it with --from <state> and --to <state>;

for example, this is a more explicit version of the above:

fud2 foo.futil -o bar.sv --from calyx --to verilog

You can also omit the input and output filenames to instead use stdin and stdout.

In that case, --from and --to respectively are required.

So here’s yet another way to do the same thing:

fud2 --from calyx --to verilog < foo.futil > bar.sv

This is handy if you just want to print the result of a build to the console:

fud2 foo.futil --to verilog

Some operations use other configuration options, which can come from either your fud2.toml or the command line.

Use --set key=value to override any such option.

Advanced Options

Use fud2 --help for an overview of the command-line interface.

Here are some options you might need:

- By default,

fud2runs the build in a directory called.fud2within the working directory. It automatically deletes this directory when the build is done.- It can be useful to keep this build directory around for debugging or as a “cache” for future builds. Use

--keepto preventfud2from deleting the build directory. - You can also tell

fud2to use a different build directory with--dir. If you give it an existing directory, it will never be deleted, even without--keep. (Only “fresh” build directories are automatically cleaned up.)

- It can be useful to keep this build directory around for debugging or as a “cache” for future builds. Use

- If you don’t like the operation path that